AI-Powered Brain-Computer Interface Co-pilot Offers New Autonomy for People with Paralysis

Scientists at the University of California, Los Angeles (UCLA) have developed an AI-powered “co-pilot” designed to make assistive devices for people with paralysis substantially easier to control. The research, conducted in the Neural Engineering and Computation Lab led by Professor Jonathan Kao with student developer Sangjoon Lee, tackles a major problem with non-invasive, wearable brain-computer interfaces (BCIs): “noisy” signals. Because these devices record brain activity from outside the skull, the specific command a user intends (the “signal”) is faint and easily drowned out by all the other electrical activity in the brain (the “noise”), much like trying to hear a whisper in a loud, crowded room. This low signal-to-noise ratio has made it difficult for users to control devices with precision.
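For readers who want the quantity behind the whisper analogy: signal-to-noise ratio is conventionally defined as the power of the signal divided by the power of the noise, and it is often quoted in decibels. This is the general engineering definition, not a figure reported for the UCLA system.

$$
\mathrm{SNR} = \frac{P_{\text{signal}}}{P_{\text{noise}}}, \qquad
\mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10}\!\left(\frac{P_{\text{signal}}}{P_{\text{noise}}}\right)
$$

For example, if the background activity carries ten times the power of the intended command, the SNR is 0.1, or -10 dB.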

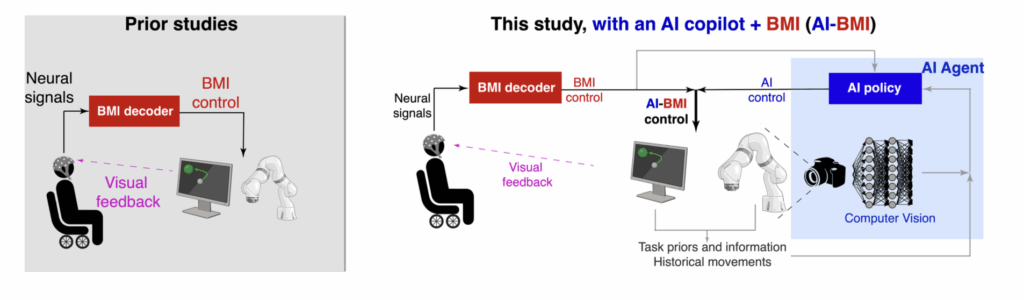

The team’s solution rests on a concept called shared autonomy. Instead of relying only on the user’s “noisy” brain signals, the AI co-pilot acts as an intelligent partner that also analyzes the environment, using data such as a video feed of the robotic arm. By combining the user’s likely intent with this real-world context, the system can predict the desired action far more reliably than it could from the brain signals alone. The AI then helps complete the movement, compensating for the background noise that limited earlier systems.
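To make the idea of shared autonomy concrete, here is a minimal Python sketch of one common textbook formulation: Bayesian inference over a set of candidate goals, followed by blending the user’s decoded command with an assistive command toward the likeliest goal. This is a swapped-in illustrative technique, not the UCLA team’s method. The target positions (standing in for objects a camera might detect), the `kappa` decoder-trust parameter, the sample velocities, and the functions `update_intent` and `blend` are all hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def _unit(v: Vec) -> Vec:
    """Normalize a 2-D vector; a zero vector stays zero."""
    n = math.hypot(v[0], v[1])
    return (0.0, 0.0) if n == 0 else (v[0] / n, v[1] / n)


def update_intent(prior: List[float], cursor: Vec, decoded_vel: Vec,
                  targets: List[Vec], kappa: float = 2.0) -> List[float]:
    """One Bayesian update of P(goal | decoded commands so far).

    Likelihood (an assumption): exp(kappa * cosine between the decoded
    velocity and the direction from the cursor to each candidate goal).
    kappa is a hypothetical 'trust in the decoder' knob; noisier
    signals call for a smaller kappa.
    """
    u = _unit(decoded_vel)
    weights = []
    for p, t in zip(prior, targets):
        d = _unit((t[0] - cursor[0], t[1] - cursor[1]))
        cos_sim = u[0] * d[0] + u[1] * d[1]
        weights.append(p * math.exp(kappa * cos_sim))
    total = sum(weights)
    return [w / total for w in weights]


def blend(cursor: Vec, decoded_vel: Vec, targets: List[Vec],
          posterior: List[float], speed: float = 1.0) -> Tuple[Vec, int]:
    """Mix the user's decoded velocity with a push toward the likeliest goal."""
    best = max(range(len(targets)), key=lambda i: posterior[i])
    alpha = posterior[best]  # more assistance when the co-pilot is more confident
    d = _unit((targets[best][0] - cursor[0], targets[best][1] - cursor[1]))
    vel = ((1 - alpha) * decoded_vel[0] + alpha * speed * d[0],
           (1 - alpha) * decoded_vel[1] + alpha * speed * d[1])
    return vel, best


# Hypothetical scene: three objects a camera might detect around the cursor.
targets = [(10.0, 0.0), (0.0, 10.0), (-10.0, 0.0)]
posterior = [1 / 3, 1 / 3, 1 / 3]  # no preference before any brain data
cursor = (0.0, 0.0)
# Made-up noisy decoder outputs from a user who is aiming to the right.
noisy_samples = [(0.9, 0.5), (1.1, -0.4), (0.7, 0.3)]

for v in noisy_samples:
    posterior = update_intent(posterior, cursor, v, targets)

vel, best = blend(cursor, noisy_samples[-1], targets, posterior)
print("likeliest goal:", best, "posterior:", [round(p, 3) for p in posterior])
print("assisted velocity:", (round(vel[0], 3), round(vel[1], 3)))
```

Weighting the assistance by the co-pilot’s confidence means a user whose noisy commands consistently point one way gets strong help, while ambiguous input leaves more control with the user. With three goals the confidence never drops below one third, so this toy version always provides some assistance; a real system would tune that trade-off.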

The results of this approach are striking. In lab tests, participants using the AI co-pilot to control a computer cursor and a robotic arm improved their performance nearly fourfold. This leap could give individuals with paralysis a new level of independence. By making wearable BCI technology far more reliable and intuitive, the approach could allow users to perform complex daily tasks on their own, reducing their reliance on caregivers.
