Sony’s “Intelligent Vision” Can Read Lips And Could Boost Accessibility In The Future

As more advanced technology enters our daily lives, many possibilities that were once considered science fiction are becoming real. Artificial intelligence in everyday objects is a fine example. AI is now embedded in products we use regularly, making them smarter and more capable.

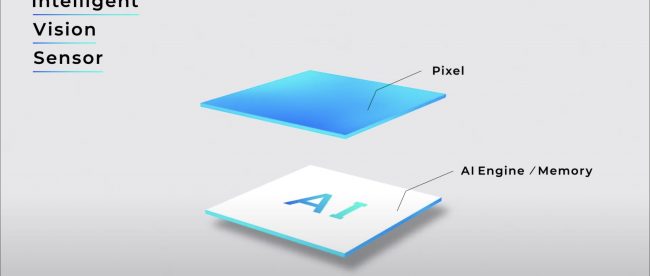

At CES 2021, Sony presented an overview of its Visual Speech Enablement technology. It uses camera sensors with built-in artificial intelligence to detect a face, isolate that person’s lips, track their movement, and translate that movement into words. Because the technique focuses only on the moving lips, foreground and background noise isn’t really an issue. What’s also interesting is that, because it reads the lips through a camera, it doesn’t even require a microphone!

Sony has some initial use cases in mind for this technology, such as factory automation, voice-enabled ATMs, and kiosks. For now, it is optimized for computers, though it may come to phones in the future. It could also have many uses in accessibility, such as reading lips to improve auto-generated captions or to reduce the need for a relay operator. According to Sony, Visual Speech Enablement is not ready for these applications yet but could be in the future.

To learn more about Visual Speech Enablement using Intelligent Vision Sensors, watch the video below. What other uses can you think of for cameras that detect lip movement and recognize words?

Source: PC Mag

Additional reading on Intelligent Vision: Sony
